Goal

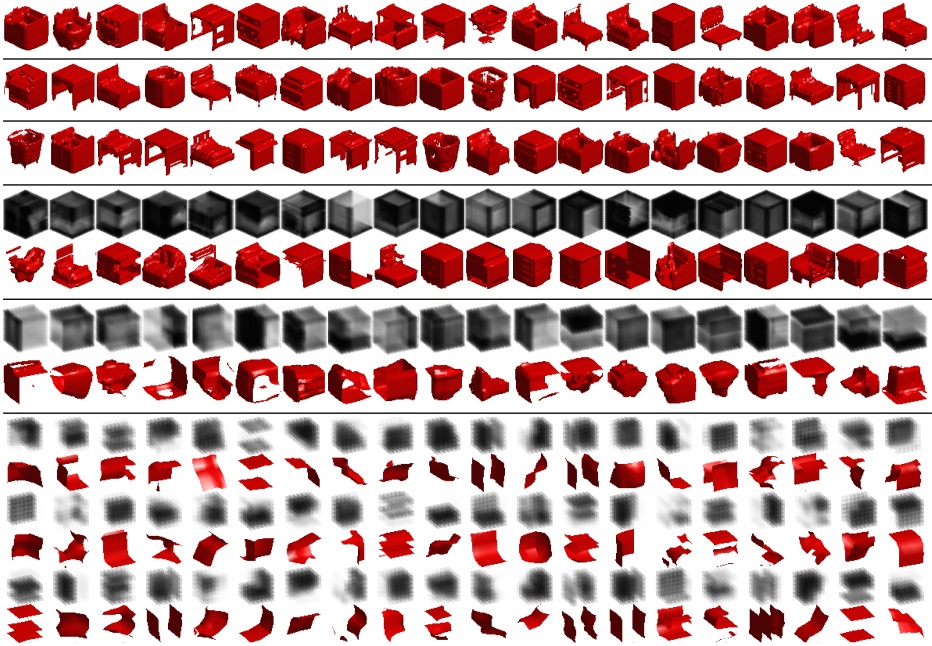

The goal of the Princeton ModelNet project is to provide researchers in computer vision, computer graphics, robotics and cognitive science with a comprehensive, clean collection of 3D CAD models of objects.

To build the core of the dataset, we compiled a list of the most common object categories in the world, using the statistics obtained from the SUN database.

Once we had established a vocabulary of object categories, we collected 3D CAD models for each category by querying online search engines with each category term.

Then we hired human workers on Amazon Mechanical Turk to decide manually whether each CAD model belongs to its specified category, using a tool we designed in-house with quality control.

To obtain a very clean dataset, we chose 10 popular object categories and manually deleted the models that did not belong to them.

We also manually aligned the orientation of the CAD models in this 10-class subset.

We provide both the 10-class subset and the full dataset for download.

Download 10-Class Orientation-aligned Subset

ModelNet10.zip: this ZIP file contains CAD models from the 10 categories used to train the deep network in our 3D deep learning project. The training and testing split is included in the file.

The CAD models were cleaned completely in-house, and we manually aligned their orientations (but not their scale).

Download 40-Class Subset

ModelNet40.zip: this ZIP file contains CAD models from the 40 categories used to train the deep network in our 3D deep learning project. The training and testing split is included in the file.

The CAD models were cleaned completely in-house.
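Both archives include the training and testing split. As a minimal sketch, assuming each archive unpacks to `<root>/<category>/train/*.off` and `<root>/<category>/test/*.off` (an assumed layout, so check your extracted copy), the split could be enumerated in Python as below. The function name `list_split` is hypothetical and not part of any ModelNet tooling.

```python
from pathlib import Path


def list_split(root, split):
    """Return sorted (off_path, category) pairs for one split.

    Assumed (hypothetical) layout: root/<category>/<split>/*.off
    """
    if split not in ("train", "test"):
        raise ValueError(f"split must be 'train' or 'test', got {split!r}")
    samples = []
    # Each sub-directory of root is treated as one object category.
    for category_dir in sorted(p for p in Path(root).iterdir() if p.is_dir()):
        split_dir = category_dir / split
        if not split_dir.is_dir():
            continue  # skip categories that lack this split
        for off_path in sorted(split_dir.glob("*.off")):
            samples.append((off_path, category_dir.name))
    return samples
```

For example, `list_split("ModelNet10", "train")` would return every training model paired with its category name. Sorting both directories and files keeps the order deterministic across machines, which helps when results need to be reproduced.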

Aligned 40-Class Subset

An orientation-aligned version of the 40-class ModelNet models is also available. This data is provided by N. Sedaghat, M. Zolfaghari, E. Amiri and T. Brox, authors of Orientation-boosted Voxel Nets for 3D Object Recognition [8].

The CAD models are in Object File Format (OFF).

We also provide Matlab functions to read and visualize OFF files in our Princeton Vision Toolkit (PVT).
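For readers working outside Matlab, here is a minimal Python sketch of an ASCII OFF reader. It assumes the plain `OFF` variant: a header line, a line with vertex, face and edge counts, one `x y z` line per vertex, and one `n i1 ... in` line per face with zero-based vertex indices and no per-face colors. It also tolerates a header fused with the counts (for example `OFF490 518 0`), which some ModelNet files are reported to contain. `parse_off` and `read_off` are hypothetical names, not functions from PVT.

```python
def parse_off(text):
    """Parse the text of an ASCII OFF file into (vertices, faces)."""
    tokens = []
    for line in text.splitlines():
        line = line.split("#", 1)[0]  # drop '#' comments
        tokens.extend(line.split())
    if not tokens or not tokens[0].startswith("OFF"):
        raise ValueError("missing 'OFF' header")
    # Assumption: a fused header such as "OFF490" carries the vertex count.
    fused = tokens[0][3:]
    tokens = ([fused] if fused else []) + tokens[1:]

    pos = 0

    def take(n):
        """Consume the next n tokens, failing cleanly on truncated files."""
        nonlocal pos
        if pos + n > len(tokens):
            raise ValueError("unexpected end of OFF data")
        chunk = tokens[pos:pos + n]
        pos += n
        return chunk

    n_vertices, n_faces, _n_edges = (int(t) for t in take(3))
    vertices = [tuple(float(t) for t in take(3)) for _ in range(n_vertices)]
    faces = []
    for _ in range(n_faces):
        (k,) = take(1)  # number of vertices in this face
        faces.append(tuple(int(t) for t in take(int(k))))
    return vertices, faces


def read_off(path):
    """Read an ASCII OFF file from disk and parse it."""
    with open(path, encoding="utf-8") as f:
        return parse_off(f.read())
```

Parsing from a token stream rather than line by line keeps the reader independent of how the counts and coordinates are wrapped across lines; the edge count is read but ignored, as it usually is for OFF meshes.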

ModelNet Benchmark Leaderboard

Please email Shuran Song to add or update your results.

In your email, please provide the following information in this format:

Algorithm Name, ModelNet40 Classification, ModelNet40 Retrieval, ModelNet10 Classification, ModelNet10 Retrieval

Author list, Paper title, Conference. Link to paper.

Example:

3D-DescriptorNet, -, -, 92.4%, -

Jianwen Xie, Zilong Zheng, Ruiqi Gao, Wenguan Wang, Song-Chun Zhu, and Ying Nian Wu, Learning Descriptor Networks for 3D Shape Synthesis and Analysis. CVPR 2018, http://...
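The first line of the email lists the algorithm name followed by exactly four result fields, in the order ModelNet40 classification, ModelNet40 retrieval, ModelNet10 classification, ModelNet10 retrieval, with `-` for any benchmark that was not evaluated. A tiny Python helper (hypothetical, for illustration only) makes that field order explicit:

```python
def leaderboard_line(name, mn40_cls=None, mn40_ret=None,
                     mn10_cls=None, mn10_ret=None):
    """Format 'Name, MN40 cls, MN40 ret, MN10 cls, MN10 ret'.

    Hypothetical helper: values are percentages (92.4 -> '92.4%'),
    and None marks a benchmark that was not evaluated ('-').
    """
    results = (mn40_cls, mn40_ret, mn10_cls, mn10_ret)
    cells = ["-" if value is None else f"{value}%" for value in results]
    return ", ".join([name, *cells])
```

For instance, `leaderboard_line("3D-DescriptorNet", mn10_cls=92.4)` produces `3D-DescriptorNet, -, -, 92.4%, -`. Keyword arguments make it hard to put a result in the wrong column.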

| Algorithm | ModelNet40 Classification (Accuracy) | ModelNet40 Retrieval (mAP) | ModelNet10 Classification (Accuracy) | ModelNet10 Retrieval (mAP) |
|---|---|---|---|---|
| RS-CNN [63] | 93.6% | - | - | - |
| LP-3DCNN [62] | 92.1% | - | 94.4% | - |
| LDGCNN [61] | 92.9% | - | - | - |
| Primitive-GAN [60] | 86.4% | - | 92.2% | - |
| 3DCapsule [59] | 92.7% | - | 94.7% | - |
| 3D2SeqViews [58] | 93.40% | 90.76% | 94.71% | 92.12% |
| OrthographicNet [57] | - | - | 88.56% | 86.85% |
| Ma et al. [56] | 91.05% | 84.34% | 95.29% | 93.19% |
| MLVCNN [55] | 94.16% | 92.84% | - | - |
| iMHL [54] | 97.16% | - | - | - |
| HGNN [53] | 96.6% | - | - | - |
| SPNet [52] | 92.63% | 85.21% | 97.25% | 94.20% |
| MHBN [51] | 94.7% | - | 95.0% | - |
| VIPGAN [50] | 91.98% | 89.23% | 94.05% | 90.69% |
| Point2Sequence [49] | 92.60% | - | 95.30% | - |
| Triplet-Center Loss [48] | - | 88.0% | - | - |
| PVNet [47] | 93.2% | 89.5% | - | - |
| GVCNN [46] | 93.1% | 85.7% | - | - |
| MLH-MV [45] | 93.11% | - | 94.80% | - |
| MVCNN-New [44] | 95.0% | - | - | - |
| SeqViews2SeqLabels [43] | 93.40% | 89.09% | 94.82% | 91.43% |
| G3DNet [42] | 91.13% | - | 93.1% | - |
| VSL [41] | 84.5% | - | 91.0% | - |
| 3D-CapsNets [40] | 82.73% | 70.1% | 93.08% | 88.44% |
| KCNet [39] | 91.0% | - | 94.4% | - |
| FoldingNet [38] | 88.4% | - | 94.4% | - |
| binVoxNetPlus [37] | 85.47% | - | 92.32% | - |
| DeepSets [36] | 90.3% | - | - | - |
| 3D-DescriptorNet [35] | - | - | 92.4% | - |
| SO-Net [34] | 93.4% | - | 95.7% | - |
| Minto et al. [33] | 89.3% | - | 93.6% | - |
| RotationNet [32] | 97.37% | - | 98.46% | - |
| LonchaNet [31] | - | - | 94.37% | - |
| Achlioptas et al. [30] | 84.5% | - | 95.4% | - |
| PANORAMA-ENN [29] | 95.56% | 86.34% | 96.85% | 93.28% |
| 3D-A-Nets [28] | 90.5% | 80.1% | - | - |
| Soltani et al. [27] | 82.10% | - | - | - |
| Arvind et al. [26] | 86.50% | - | - | - |
| LonchaNet [25] | - | - | 94.37% | - |
| 3DmFV-Net [24] | 91.6% | - | 95.2% | - |
| Zanuttigh and Minto [23] | 87.8% | - | 91.5% | - |
| Wang et al. [22] | 93.8% | - | - | - |
| ECC [21] | 83.2% | - | 90.0% | - |
| PANORAMA-NN [20] | 90.7% | 83.5% | 91.1% | 87.4% |
| MVCNN-MultiRes [19] | 91.4% | - | - | - |
| FPNN [18] | 88.4% | - | - | - |
| PointNet [17] | 89.2% | - | - | - |
| Klokov and Lempitsky [16] | 91.8% | - | 94.0% | - |
| LightNet [15] | 88.93% | - | 93.94% | - |
| Xu and Todorovic [14] | 81.26% | - | 88.00% | - |
| Geometry Image [13] | 83.9% | 51.3% | 88.4% | 74.9% |
| Set-convolution [11] | 90% | - | - | - |
| PointNet [12] | - | - | 77.6% | - |
| 3D-GAN [10] | 83.3% | - | 91.0% | - |
| VRN Ensemble [9] | 95.54% | - | 97.14% | - |
| ORION [8] | - | - | 93.8% | - |
| FusionNet [7] | 90.8% | - | 93.11% | - |
| Pairwise [6] | 90.7% | - | 92.8% | - |
| MVCNN [3] | 90.1% | 79.5% | - | - |
| GIFT [5] | 83.10% | 81.94% | 92.35% | 91.12% |
| VoxNet [2] | 83% | - | 92% | - |
| DeepPano [4] | 77.63% | 76.81% | 85.45% | 84.18% |
| 3DShapeNets [1] | 77% | 49.2% | 83.5% | 68.3% |
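The leaderboard reports classification accuracy and retrieval mean average precision (mAP). The Python sketch below shows one common way to compute both. It is an illustration, not the official evaluation script: individual papers may use different retrieval protocols (for example, which models serve as queries and whether a query is excluded from its own ranking). It also assumes each ranking covers the whole gallery, so every relevant model appears somewhere in the list. All function names are hypothetical.

```python
def classification_accuracy(predicted, actual):
    """Fraction of test models whose predicted label equals the true label."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("need two non-empty label lists of equal length")
    return sum(p == a for p, a in zip(predicted, actual)) / len(actual)


def average_precision(ranked_labels, query_label):
    """AP for one query: mean of the precision at each relevant hit.

    Assumes ranked_labels covers the whole gallery, ordered from most to
    least similar, so hits equals the total number of relevant models.
    """
    hits = 0
    precision_sum = 0.0
    for rank, label in enumerate(ranked_labels, start=1):
        if label == query_label:
            hits += 1
            precision_sum += hits / rank  # precision at this cut-off
    return precision_sum / hits if hits else 0.0


def mean_average_precision(rankings, query_labels):
    """mAP: AP averaged over all queries."""
    if len(rankings) != len(query_labels) or not rankings:
        raise ValueError("need one ranking per query label")
    total = sum(average_precision(r, q) for r, q in zip(rankings, query_labels))
    return total / len(rankings)
```

For example, a query labelled `chair` whose ranked gallery reads `chair, bed, chair` has precision 1/1 at the first hit and 2/3 at the second, giving an AP of about 0.833.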

[1] Z. Wu, S. Song, A. Khosla, F. Yu, L. Zhang, X. Tang and J. Xiao. 3D ShapeNets: A Deep Representation for Volumetric Shapes. CVPR 2015.

[2] D. Maturana and S. Scherer. VoxNet: A 3D Convolutional Neural Network for Real-Time Object Recognition. IROS 2015.

[3] H. Su, S. Maji, E. Kalogerakis, E. Learned-Miller. Multi-view Convolutional Neural Networks for 3D Shape Recognition. ICCV 2015.

[4] B. Shi, S. Bai, Z. Zhou, X. Bai. DeepPano: Deep Panoramic Representation for 3-D Shape Recognition. Signal Processing Letters 2015.

[5] Song Bai, Xiang Bai, Zhichao Zhou, Zhaoxiang Zhang, Longin Jan Latecki. GIFT: A Real-time and Scalable 3D Shape Search Engine. CVPR 2016.

[6] Edward Johns, Stefan Leutenegger and Andrew J. Davison. Pairwise Decomposition of Image Sequences for Active Multi-View Recognition. CVPR 2016.

[7] Vishakh Hegde, Reza Zadeh. 3D Object Classification Using Multiple Data Representations.

[8] Nima Sedaghat, Mohammadreza Zolfaghari, Ehsan Amiri, Thomas Brox. Orientation-boosted Voxel Nets for 3D Object Recognition. BMVC 2017.

[9] Andrew Brock, Theodore Lim, J.M. Ritchie, Nick Weston. Generative and Discriminative Voxel Modeling with Convolutional Neural Networks.

[10] Jiajun Wu, Chengkai Zhang, Tianfan Xue, William T. Freeman, Joshua B. Tenenbaum. Learning a Probabilistic Latent Space of Object Shapes via 3D Generative-Adversarial Modeling. NIPS 2016.

[11] Siamak Ravanbakhsh, Jeff Schneider, Barnabas Poczos. Deep Learning with Sets and Point Clouds.

[12] A. Garcia-Garcia, F. Gomez-Donoso, J. Garcia-Rodriguez, S. Orts-Escolano, M. Cazorla, J. Azorin-Lopez. PointNet: A 3D Convolutional Neural Network for Real-Time Object Class Recognition.

[13] Ayan Sinha, Jing Bai, Karthik Ramani. Deep Learning 3D Shape Surfaces Using Geometry Images. ECCV 2016.

[14] Xu Xu and Sinisa Todorovic. Beam Search for Learning a Deep Convolutional Neural Network of 3D Shapes.

[15] Shuaifeng Zhi, Yongxiang Liu, Xiang Li, Yulan Guo. Towards real-time 3D object recognition: A lightweight volumetric CNN framework using multitask learning. Computers and Graphics (Elsevier).

[16] Roman Klokov, Victor Lempitsky. Escape from Cells: Deep Kd-Networks for the Recognition of 3D Point Cloud Models.

[17] Charles R. Qi, Hao Su, Kaichun Mo, and Leonidas J. Guibas. PointNet: Deep Learning on Point Sets for 3D Classification and Segmentation. CVPR 2017.

[18] Yangyan Li, Soeren Pirk, Hao Su, Charles R. Qi, and Leonidas J. Guibas. FPNN: Field Probing Neural Networks for 3D Data. NIPS 2016.

[19] Charles R. Qi, Hao Su, Matthias Niessner, Angela Dai, Mengyuan Yan, and Leonidas J. Guibas. Volumetric and Multi-View CNNs for Object Classification on 3D Data. CVPR 2016.

[20] K. Sfikas, T. Theoharis and I. Pratikakis. Exploiting the PANORAMA Representation for Convolutional Neural Network Classification and Retrieval. 3DOR 2017.

[21] Martin Simonovsky, Nikos Komodakis. Dynamic Edge-Conditioned Filters in Convolutional Neural Networks on Graphs.

[22] Chu Wang, Marcello Pelillo, Kaleem Siddiqi. Dominant Set Clustering and Pooling for Multi-View 3D Object Recognition. BMVC 2017.

[23] Pietro Zanuttigh and Ludovico Minto. Deep Learning for 3D Shape Classification from Multiple Depth Maps. ICIP 2017.

[24] Yizhak Ben-Shabat, Michael Lindenbaum, Anath Fischer. 3D Point Cloud Classification and Segmentation using 3D Modified Fisher Vector Representation for Convolutional Neural Networks. arXiv 2017.

[25] F. Gomez-Donoso, A. Garcia-Garcia, J. Garcia-Rodriguez, S. Orts-Escolano, M. Cazorla. LonchaNet: A sliced-based CNN architecture for real-time 3D object recognition. International Joint Conference on Neural Networks (IJCNN), 2017.

[26] Varun Arvind, Anthony Costa, Marcus Badgeley, Samuel Cho, Eric Oermann. Wide and deep volumetric residual networks for volumetric image classification. arXiv 2017.

[27] Amir Arsalan Soltani, Haibin Huang, Jiajun Wu, Tejas D. Kulkarni, Joshua B. Tenenbaum. Synthesizing 3D Shapes via Modeling Multi-View Depth Maps and Silhouettes with Deep Generative Networks. CVPR 2017.

[28] Mengwei Ren, Liang Niu, Yi Fang. 3D-A-Nets: 3D Deep Dense Descriptor for Volumetric Shapes with Adversarial Networks.

[29] K. Sfikas, I. Pratikakis and T. Theoharis. Ensemble of PANORAMA-based Convolutional Neural Networks for 3D Model Classification and Retrieval. Computers and Graphics.

[30] Panos Achlioptas, Olga Diamanti, Ioannis Mitliagkas, Leonidas Guibas. Learning Representations and Generative Models for 3D Point Clouds. arXiv 2017.

[31] F. Gomez-Donoso, A. Garcia-Garcia, J. Garcia-Rodriguez, S. Orts-Escolano, M. Cazorla. LonchaNet: A sliced-based CNN architecture for real-time 3D object recognition.

[32] Asako Kanezaki, Yasuyuki Matsushita and Yoshifumi Nishida. RotationNet: Joint Object Categorization and Pose Estimation Using Multiviews from Unsupervised Viewpoints. CVPR 2018.

[33] L. Minto, P. Zanuttigh, G. Pagnutti. Deep Learning for 3D Shape Classification Based on Volumetric Density and Surface Approximation Clues. International Conference on Computer Vision Theory and Applications (VISAPP), 2018.

[34] J. Li, B. M. Chen, G. H. Lee. SO-Net: Self-Organizing Network for Point Cloud Analysis. CVPR 2018.

[35] Jianwen Xie, Zilong Zheng, Ruiqi Gao, Wenguan Wang, Song-Chun Zhu, and Ying Nian Wu. Learning Descriptor Networks for 3D Shape Synthesis and Analysis. CVPR 2018.

[36] Manzil Zaheer, Satwik Kottur, Siamak Ravanbakhsh, Barnabás Póczos, Ruslan Salakhutdinov, Alexander J. Smola. Deep Sets. NIPS 2017.

[37] Chao Ma, Wei An, Yinjie Lei, Yulan Guo. BV-CNNs: Binary volumetric convolutional neural networks for 3D object recognition. BMVC2017.

Chao Ma, Yulan Guo, Yinjie Lei, Wei An. Binary volumetric convolutional neural networks for 3D object recognition. IEEE Transactions on Instrumentation & Measurement.

[38] Yaoqing Yang, Chen Feng, Yiru Shen, Dong Tian. FoldingNet: Point Cloud Auto-encoder via Deep Grid Deformation. CVPR 2018.

[39] Yiru Shen, Chen Feng, Yaoqing Yang and Dong Tian. Mining Point Cloud Local Structures by Kernel Correlation and Graph Pooling. CVPR 2018.

[40] Ryan Lambert. Capsule Nets for Content Based 3D Model Retrieval.

[41] Shikun Liu, C. Lee Giles, and Alexander G. Ororbia II. Learning a Hierarchical Latent-Variable Model of 3D Shapes. 3DV 2018.

[42] Miguel Dominguez, Rohan Dhamdhere, Atir Petkar, Saloni Jain, Shagan Sah, Raymond Ptucha. General-Purpose Deep Point Cloud Feature Extractor. WACV 2018.

[43] Zhizhong Han, Mingyang Shang, Zhenbao Liu, Chi-Man Vong, Yu-Shen Liu, Junwei Han, Matthias Zwicker, C.L. Philip Chen. SeqViews2SeqLabels: Learning 3D Global Features via Aggregating Sequential Views by RNN with Attention. IEEE Transactions on Image Processing, 2019, 28(2): 658-672.

[44] Jong-Chyi Su, Matheus Gadelha, Rui Wang, and Subhransu Maji. A Deeper Look at 3D Shape Classifiers. Second Workshop on 3D Reconstruction Meets Semantics, ECCV 2018.

[45] Kripasindhu Sarkar, Basavaraj Hampiholi, Kiran Varanasi, Didier Stricker. Learning 3D Shapes as Multi-Layered Height-maps using 2D Convolutional Networks. ECCV 2018.

[46] Yifan Feng, Zizhao Zhang, Xibin Zhao, Rongrong Ji, Yue Gao. GVCNN: Group-View Convolutional Neural Networks for 3D Shape Recognition. CVPR 2018.

[47] Haoxuan You, Yifan Feng, Rongrong Ji, Yue Gao. PVNet: A Joint Convolutional Network of Point Cloud and Multi-View for 3D Shape Recognition. ACM MM 2018.

[48] Xinwei He, Yang Zhou, Zhichao Zhou, Song Bai, and Xiang Bai. Triplet-Center Loss for Multi-View 3D Object Retrieval. CVPR 2018.

[49] Xinhai Liu, Zhizhong Han, Yu-Shen Liu, Matthias Zwicker. Point2Sequence: Learning the Shape Representation of 3D Point Clouds with an Attention-based Sequence to Sequence Network. AAAI 2019.

[50] Zhizhong Han, Mingyang Shang, Yu-Shen Liu, Matthias Zwicker. View Inter-Prediction GAN: Unsupervised Representation Learning for 3D Shapes by Learning Global Shape Memories to Support Local View Predictions. AAAI 2019.

[51] Tan Yu, Jingjing Meng, and Junsong Yuan. Multi-view Harmonized Bilinear Network for 3D Object Recognition. CVPR 2018.

[52] Mohsen Yavartanoo, Eu Young Kim, Kyoung Mu Lee. SPNet: Deep 3D Object Classification and Retrieval using Stereographic Projection. ACCV 2018.

[53] Yifan Feng, Haoxuan You, Zizhao Zhang, Rongrong Ji, Yue Gao. Hypergraph Neural Networks. AAAI 2019.

[54] Zizhao Zhang, Haojie Lin, Xibin Zhao, Rongrong Ji, and Yue Gao. Inductive Multi-Hypergraph Learning and Its Application on View-Based 3D Object Classification. IEEE Transactions on Image Processing, 2018.

[55] Jianwen Jiang, Di Bao, Ziqiang Chen, Xibin Zhao, Yue Gao. MLVCNN: Multi-Loop-View Convolutional Neural Network for 3D Shape Retrieval. AAAI 2019.

[56] Chao Ma, Yulan Guo, Jungang Yang, Wei An. Learning Multi-view Representation with LSTM for 3D Shape Recognition and Retrieval. IEEE Transactions on Multimedia, 2018.

[57] Hamidreza Kasaei. OrthographicNet: A Deep Learning Approach for 3D Object Recognition in Open-Ended Domains. arXiv 2019.

[58] Zhizhong Han, Honglei Lu, Zhenbao Liu, Chi-Man Vong, Yu-Shen Liu, Matthias Zwicker, Junwei Han, C.L. Philip Chen. 3D2SeqViews: Aggregating Sequential Views for 3D Global Feature Learning by CNN with Hierarchical Attention Aggregation. IEEE Transactions on Image Processing, 2019.

[59] A. Cheraghian and L. Petersson. 3DCapsule: Extending the Capsule Architecture to Classify 3D Point Clouds. 2019 IEEE Winter Conference on Applications of Computer Vision (WACV), 2019, pp. 1194-1202.

[60] Salman H. Khan, Yulan Guo, Munawar Hayat, Nick Barnes. Unsupervised Primitive Discovery for Improved 3D Generative Modeling. CVPR 2019.

[61] Kuangen Zhang, Ming Hao, Jing Wang, Clarence W. de Silva, Chenglong Fu. Linked Dynamic Graph CNN: Learning on Point Cloud via Linking Hierarchical Features. arXiv.

[62] Sudhakar Kumawat and Shanmuganathan Raman. LP-3DCNN: Unveiling Local Phase in 3D Convolutional Neural Networks. CVPR 2019.

[63] Yongcheng Liu, Bin Fan, Shiming Xiang, Chunhong Pan. Relation-Shape Convolutional Neural Network for Point Cloud Analysis. CVPR 2019.

Download Full Dataset

Please email Shuran Song to obtain the Matlab toolbox for downloading.

Citation

If you find this dataset useful, please cite the following paper:

Copyright

All CAD models are downloaded from the Internet and the original authors hold the copyright of the CAD models.

The labels were obtained by us via the Amazon Mechanical Turk service and are provided freely.

This dataset is provided for the convenience of academic research only.